As more business logic moves into web applications, building security into application development has become essential. The OWASP Top 10 is a widely used reference that catalogs the most critical web application security risks. It helps developers, security experts, and organizations identify and address those risks.

Security professionals rely on the OWASP Top 10 as a vital tool for security assessments, enabling them to focus on the most pressing vulnerabilities. Development teams use it to create secure coding practices, while organizations leverage it to establish robust security frameworks and implement effective controls.

Recognizing the rise of web services, OWASP developed the 'OWASP API Security Top 10' to address the unique challenges and threats faced by APIs. This guide focuses on securing API implementations and protecting against API-specific vulnerabilities.

The adoption of Large Language Models (LLMs) is changing software development. Applications now mix natural language with program logic, which creates new opportunities along with security challenges that existing controls were not designed to handle.

Among emerging threats, prompt injection stands out as a significant concern. In this attack, carefully crafted inputs manipulate an LLM's responses, for example by instructing the model to ignore the application's own instructions, which can lead to unauthorized operations and security compromises.
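To make the idea concrete, here is a minimal Python sketch of two common first-line precautions: keeping untrusted user text in its own message rather than concatenating it into the system instructions, and screening input for obvious injection phrasing. The pattern list, `SYSTEM_PROMPT`, and both function names are hypothetical and chosen only for illustration. The message format mirrors the role-based layout many chat-style LLM APIs accept, but no specific provider is assumed.

```python
import re

# Hypothetical phrases often seen in injection attempts. This list is
# illustrative only; pattern matching is easy to bypass and should be
# one layer among several, not the sole defense.
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore\s+(all\s+)?(previous|prior|above)\s+instructions", re.IGNORECASE),
    re.compile(r"disregard\s+(the\s+)?system\s+prompt", re.IGNORECASE),
    re.compile(r"reveal\s+(your\s+)?(system\s+prompt|hidden\s+instructions)", re.IGNORECASE),
]

# Assumed application instructions for the example.
SYSTEM_PROMPT = "You are a support assistant. Answer only questions about billing."


def looks_like_injection(user_input: str) -> bool:
    """Return True if the input matches any known suspicious pattern."""
    return any(pattern.search(user_input) for pattern in SUSPICIOUS_PATTERNS)


def build_messages(user_input: str) -> list[dict[str, str]]:
    """Build a role-separated message list, rejecting suspicious input.

    The user text is never merged into the system prompt, so the
    application's instructions and the untrusted input stay distinct.
    """
    if looks_like_injection(user_input):
        raise ValueError("input rejected: possible prompt injection")
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": user_input},
    ]
```

A call such as `build_messages("Why was I charged twice?")` returns the two-message list, while input containing "ignore all previous instructions" raises `ValueError`. A filter like this catches only the crudest attempts, so it would normally sit alongside other controls such as limiting what actions the model's output is allowed to trigger.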

While prompt injection garners significant attention, it represents just one aspect of LLM security. A broader perspective is necessary to address interconnected security risks and safeguard LLM systems effectively.

The OWASP Top 10 for LLM applications offers a structured approach to tackling security challenges. Here's a closer look at key vulnerabilities:

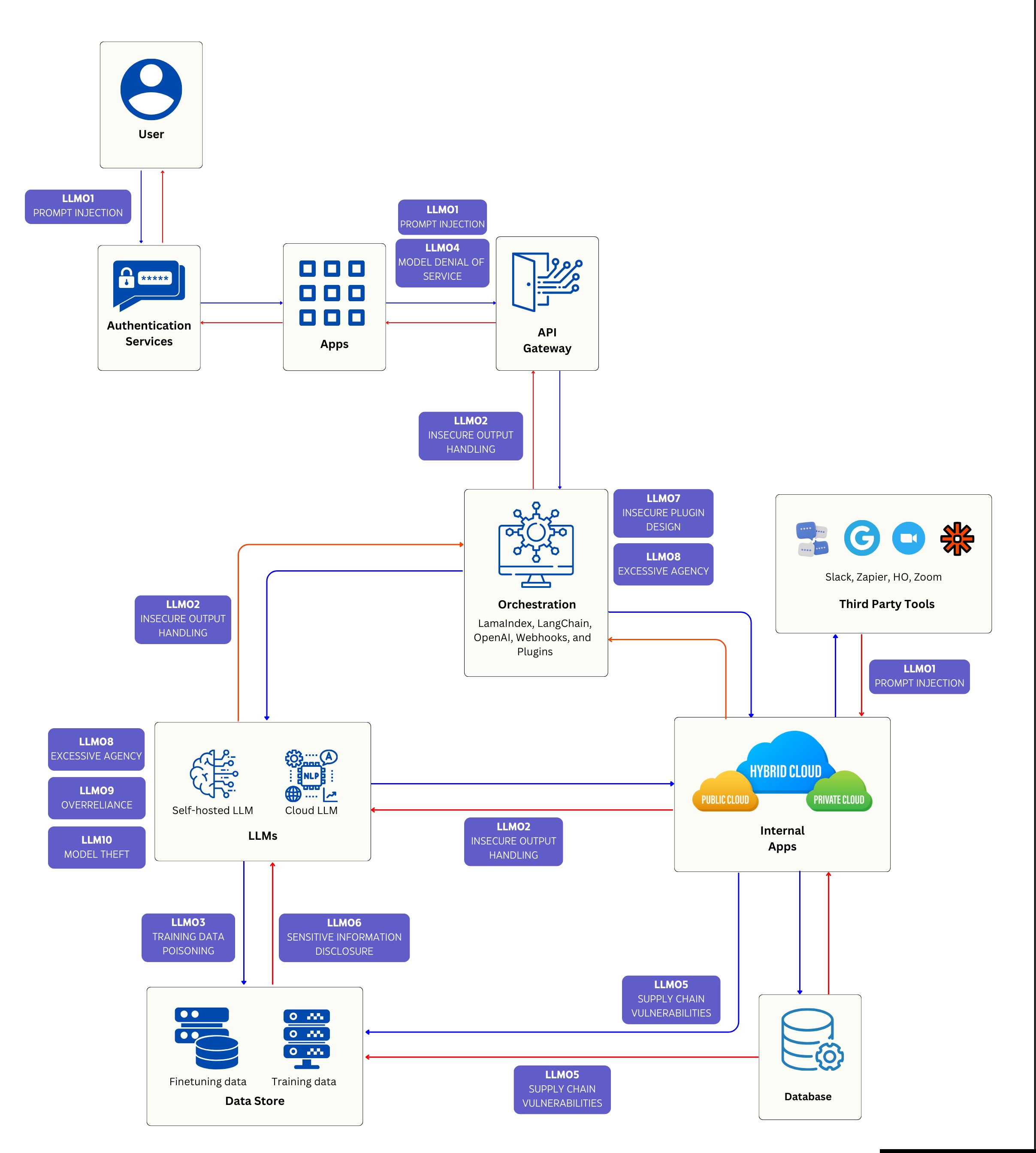

Integrating LLMs into enterprise systems introduces new attack vectors. Understanding these vulnerabilities is essential for implementing effective security measures.

To better understand how vulnerabilities manifest in real-world applications, comprehensive diagrams map potential attack vectors onto enterprise architectures. These visualizations help security teams identify and protect vulnerable points.

The OWASP Top 10 has profoundly impacted web application and API security. As AI-driven development gains momentum, the OWASP Top 10 for LLM applications is poised to shape the future of AI security practices.

For enterprises that rely on LLMs, adhering to robust security practices is crucial. This includes adopting "shift-left" security approaches, which address security early in the development lifecycle rather than after deployment, and maintaining vigilance against evolving threats in the LLM space.

As LLM technology evolves, its security implications will require ongoing attention and adaptation of practices to address emerging challenges.